How do I transform my data to a normal distribution?

When we sample data from a process, we often want to use that data to make predictions about the process and the population. If the data come from a controlled process that follows some "physical" behavior, the data may follow a specific distribution; otherwise, the distribution may be irregular or may change over time. The shape of a distribution provides clues about the process that generates the data. If we know the shape of the distribution, we can use its properties to draw inferences about the population. The shape can be checked by drawing a histogram of the data. Continuous data can follow many different distributions, such as Normal, Uniform, Weibull, and Exponential. Distributions may be symmetric or skewed (one tail longer than the other), and they may be bounded on one or both sides or unbounded. According to the Central Limit Theorem, when a result is the sum of many independent effects, none of which dominates, its distribution tends to be approximately normal. However, a process distribution can take any shape. No distribution is inherently better than another; the right one depends on the nature of the process. Some commonly used distributions and their applications are shown in the table below.

Some statistical analyses assume that the data are normally distributed. Examples include the 1-sample t-test, ANOVA, and regression; for ANOVA and regression, the assumption applies to the residuals. This is because the statistical properties of these methods were derived using special properties of the normal distribution. If the normality assumption is not satisfied, the results of the analysis may be incorrect. Hence, before performing a statistical analysis, we should know whether it assumes normality and, if so, check that assumption first. Checking data for normality was covered in a previous article. Suppose the data are not normally distributed. What can we do about it, and how can we analyze the data appropriately? If the data are close to normal, we may still treat them as normal, because many analyses give good results for mild departures from normality. However, in some cases the departure from normality is significant. We will discuss three approaches to handle this situation.

Check for Outliers

The first thing to do is check whether the data are non-normal because of outliers. Normally distributed data rarely contain extreme outliers, so if your data have outliers, they may be the reason the data are not normally distributed. First, check whether the outliers are due to data entry errors. If so, correct the data and check again whether the data are normally distributed. If there are no data entry errors, the next question is whether the outliers are due to special causes that will not recur in the future. If so, it may be acceptable to document the reasons and then delete these outliers. However, if the outliers could recur in the future, it would not be appropriate to simply delete them from the analysis, and we need other ways of handling the data.

Box-Cox Transformation

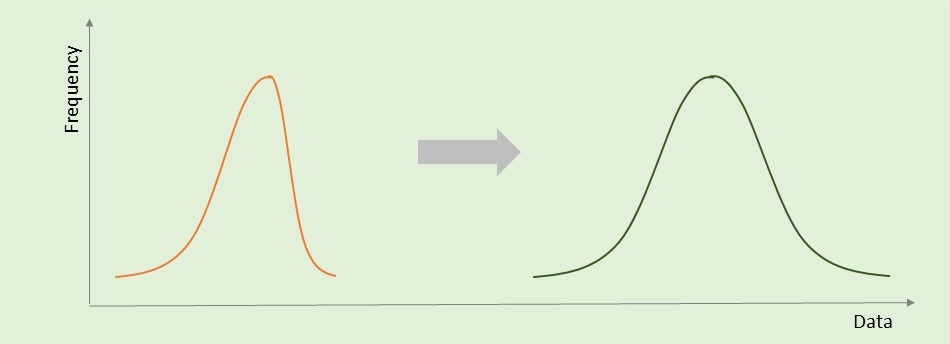

The second approach is to transform the data so that the transformed data are normally distributed. Several simple transformations have been found to make data normal. For example, the squared data values may be normally distributed. In other cases, the square root, the reciprocal, or the logarithm of the data may be normal. These simple transformations can be grouped into a single family called the Box-Cox transformation, given by the following general formula:

$$
y = \begin{cases}
x^{\lambda}, & \lambda \neq 0 \\
\ln(x), & \lambda = 0
\end{cases}
$$

where x is the raw data, y is the transformed data, and lambda is the transformation constant. The transformation is defined only for positive data (x > 0). If lambda = 1, there is no transformation; if lambda = 2, it is the square transformation, and so on. Box and Cox's original definition, (x^lambda - 1)/lambda, is a linear rescaling of x^lambda and therefore gives the transformed data the same distribution shape. The following table provides the names of some standard transformations:

| Lambda | Transformation | Name |
|---|---|---|
| 2 | y = x² | Square |
| 0.5 | y = √x | Square root |
| 0 | y = ln(x) | Natural logarithm |
| -0.5 | y = 1/√x | Reciprocal square root |
| -1 | y = 1/x | Reciprocal |
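The Box-Cox family can be written as a short Python function. This sketch uses the power form implied by the text: y = x^lambda for lambda ≠ 0 and y = ln(x) for lambda = 0. The function name `box_cox` is our own. The positivity check reflects the fact that the transformation is only defined for x > 0.

```python
import math

def box_cox(x, lam):
    """Power form of the Box-Cox transformation: x**lam, or ln(x) when lam == 0."""
    if x <= 0:
        raise ValueError("Box-Cox requires positive data (x > 0)")
    return math.log(x) if lam == 0 else x ** lam

# Apply the standard lambda values (square, square root, log, ...) to x = 4.
for lam in (2, 0.5, 0, -0.5, -1):
    print(f"lambda = {lam:>4}: y = {box_cox(4.0, lam):.4f}")
```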

How to Fit the Box-Cox Transformation

There are several approaches to determine the value of lambda for the Box-Cox transformation. The most commonly used is Maximum Likelihood Estimation (MLE); the details of this approach are beyond the scope of this article. A simple alternative is to vary lambda from -5 to +5 and find the value that produces a distribution as close to normal as possible. A common criterion is to choose the lambda that gives the smallest standard deviation of the transformed data after rescaling it by the geometric mean of the raw data. The rescaling puts all values of lambda on the same scale, and with it this choice coincides (up to the step size of the search) with the maximum likelihood estimate. Of course, lambda can take any value (say 1.63), but such a value may be hard to explain to others. Some users therefore prefer to round lambda to one of the values in the table above so that the transformation is easy to understand. Note that there is no guarantee that a Box-Cox transformation will result in a normal distribution: it is possible that no value of lambda produces normally distributed data.

Johnson Transformation

A third approach is to use a more complex family of transformations called the Johnson transformations. There are three Johnson families: SU (unbounded), SB (bounded), and SL (lognormal):

$$
\begin{aligned}
S_U:&\quad Y = \gamma + \eta \sinh^{-1}\!\left(\frac{X - \varepsilon}{\lambda}\right) \\
S_B:&\quad Y = \gamma + \eta \ln\!\left(\frac{X - \varepsilon}{\lambda + \varepsilon - X}\right), \quad \varepsilon < X < \varepsilon + \lambda \\
S_L:&\quad Y = \gamma + \eta \ln(X - \varepsilon), \quad X > \varepsilon
\end{aligned}
$$

where Y is the transformed data, X is the raw data, and gamma, eta, epsilon, and lambda are the Johnson parameters (eta > 0 and lambda > 0). Decision rules have been formulated for selecting the appropriate Johnson family (SU, SB, or SL). Several algorithms are available to fit the Johnson parameters to a data set. However, because these algorithms are complex, the solutions are not straightforward and require appropriate software to estimate the parameters. As with the Box-Cox transformation, a computer can try many combinations of the Johnson parameters to determine which set makes the transformed data as close to normal as possible.
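The three Johnson families can be written directly as Python functions. This sketch only applies a transformation whose parameters are already known. It does not select the family or fit the parameters; that requires the specialized algorithms mentioned above. It assumes the common parameterization, with gamma and eta as shape parameters, epsilon as location, and lambda as scale. The parameter values in the usage lines are hypothetical and were not fitted to any data.

```python
import math

def johnson_su(x, gamma, eta, epsilon, lam):
    """S_U (unbounded): gamma + eta * asinh((x - epsilon) / lam), eta, lam > 0."""
    return gamma + eta * math.asinh((x - epsilon) / lam)

def johnson_sb(x, gamma, eta, epsilon, lam):
    """S_B (bounded): gamma + eta * ln((x - epsilon) / (lam + epsilon - x))."""
    if not epsilon < x < epsilon + lam:
        raise ValueError("S_B requires epsilon < x < epsilon + lambda")
    return gamma + eta * math.log((x - epsilon) / (lam + epsilon - x))

def johnson_sl(x, gamma, eta, epsilon):
    """S_L (lognormal): gamma + eta * ln(x - epsilon)."""
    if x <= epsilon:
        raise ValueError("S_L requires x > epsilon")
    return gamma + eta * math.log(x - epsilon)

# Hypothetical S_L parameters for illustration only (NOT fitted to these data).
delivery_times = [22, 76, 10, 2, 3, 6, 7, 5, 6, 2, 8, 1, 3, 5, 63, 4, 22, 13, 4, 12]
transformed = [johnson_sl(x, gamma=-1.9, eta=0.9, epsilon=0.5) for x in delivery_times]
print([round(y, 2) for y in transformed])
```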

Because the Johnson transformation has more parameters to fit, it often does a better job than the Box-Cox transformation of transforming data to a normal distribution. As with the Box-Cox transformation, however, there is no guarantee that a Johnson transformation will succeed.

Note that when you transform the raw data using one of these transformations, the specification limits must also be transformed, using the same transformation and parameter values, if you need to calculate the process capability.
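The sketch below pushes the specification limits through the same transformation as the data before computing Ppk. It assumes the power-form Box-Cox transformation and a hypothetical upper specification limit of 40; no specification limits are given in this article. One detail is easy to miss. For a negative lambda, x**lambda is a decreasing function, so the transformed upper limit becomes the lower one.

```python
import math
import statistics

def box_cox(x, lam):
    """Power form of the Box-Cox transformation (requires x > 0)."""
    return math.log(x) if lam == 0 else x ** lam

def transformed_spec_limits(lsl, usl, lam):
    """Transform spec limits with the same lambda as the data (None = no limit).

    For lam < 0, x**lam is decreasing, so the transformed limits swap roles.
    """
    t_lsl = None if lsl is None else box_cox(lsl, lam)
    t_usl = None if usl is None else box_cox(usl, lam)
    return (t_usl, t_lsl) if lam < 0 else (t_lsl, t_usl)

def ppk_transformed(data, lsl, usl, lam):
    """Ppk on the transformed scale, using the overall (sample) standard deviation."""
    y = [box_cox(x, lam) for x in data]
    mean, sd = statistics.fmean(y), statistics.stdev(y)
    t_lsl, t_usl = transformed_spec_limits(lsl, usl, lam)
    indices = []
    if t_lsl is not None:
        indices.append((mean - t_lsl) / (3 * sd))
    if t_usl is not None:
        indices.append((t_usl - mean) / (3 * sd))
    return min(indices)  # at least one limit must be given

delivery_times = [22, 76, 10, 2, 3, 6, 7, 5, 6, 2, 8, 1, 3, 5, 63, 4, 22, 13, 4, 12]
# Hypothetical one-sided spec (USL = 40) and a rounded lambda of 0 (log transform).
print(round(ppk_transformed(delivery_times, None, 40.0, 0), 2))
```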

Example

Suppose we need to determine whether a delivery process is in control. The primary metric of interest is the time to deliver an order, from placement in the system until the order is signed for and received by the customer. One way to do this is to develop a control chart. Since the metric is continuous and the data are collected individually (subgroup size of 1), the appropriate chart is the I-MR chart, which assumes that the data are normally distributed. The delivery time data for this example are: 22, 76, 10, 2, 3, 6, 7, 5, 6, 2, 8, 1, 3, 5, 63, 4, 22, 13, 4, 12.

We first draw a histogram of the data and check the data for normality. From the histogram and the P value of the normality test, it is clear that the data are not normal. The next step is to check for data entry errors or other reasons for the outliers; let's say we have investigated them and did not find any issues with the data. The next step is to transform the data, so let's try a Box-Cox transformation. The results of the Box-Cox transformation are shown in the following figure. The P value of the raw data was <0.001 (not normal); after the transformation, the P value is 0.381 (consistent with normality).
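The outlier check and the lambda search in this example can be sketched with Python's standard library. The sketch makes two assumptions. First, outliers are flagged with Tukey's 1.5 × IQR fences, because the article does not name a rule. Second, lambda is chosen on a grid from -5 to +5 in steps of 0.01 by minimizing the standard deviation of the geometric-mean-rescaled data z = (x^lambda - 1)/(lambda · g^(lambda - 1)). At lambda = 0 the rescaled value is z = g · ln(x). Minimizing this standard deviation is equivalent to maximizing the Box-Cox likelihood. The software used for the figures may use a different criterion or search, so the lambda printed here need not match its result.

```python
import math
import statistics

def iqr_outliers(data, k=1.5):
    """Values outside Tukey's fences [Q1 - k*IQR, Q3 + k*IQR], plus the fences."""
    q1, _, q3 = statistics.quantiles(data, n=4)  # default 'exclusive' method
    lower, upper = q1 - k * (q3 - q1), q3 + k * (q3 - q1)
    return [x for x in data if x < lower or x > upper], (lower, upper)

def scaled_box_cox_sd(data, lam):
    """Std. deviation of Box-Cox data rescaled by the geometric mean gm.

    z = (x**lam - 1) / (lam * gm**(lam - 1)) puts every lambda on the same
    scale, so minimizing this value maximizes the Box-Cox profile likelihood.
    """
    gm = math.exp(statistics.fmean(math.log(x) for x in data))
    if lam == 0:
        z = [gm * math.log(x) for x in data]  # limit of the formula as lam -> 0
    else:
        z = [(x ** lam - 1) / (lam * gm ** (lam - 1)) for x in data]
    return statistics.stdev(z)

def best_lambda(data):
    """Grid search over lambda = -5.00, -4.99, ..., +5.00."""
    if min(data) <= 0:
        raise ValueError("Box-Cox requires positive data")
    grid = [i / 100 for i in range(-500, 501)]
    return min(grid, key=lambda lam: scaled_box_cox_sd(data, lam))

delivery_times = [22, 76, 10, 2, 3, 6, 7, 5, 6, 2, 8, 1, 3, 5, 63, 4, 22, 13, 4, 12]
flagged, fences = iqr_outliers(delivery_times)
print("flagged:", flagged, "fences:", fences)  # flagged: [76, 63] fences: (-11.0, 27.0)
lam = best_lambda(delivery_times)
print(f"Box-Cox lambda = {lam:.2f}")
```

With these fences only 76 and 63 are flagged. In the example they were investigated and kept, so the transformation is applied to all 20 values.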

A Johnson transformation is also shown in the figure below. The P value of the Johnson-transformed data is 0.99 (consistent with normality), so the Johnson transformation did an excellent job of transforming the data to a normal distribution. We can now plot the I-MR chart for the transformed data, which shows that the process is in control (the details are beyond the scope of this article).
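The I-MR chart itself is beyond the scope of this article, but the control-limit arithmetic is short. The sketch below uses the standard constants for moving ranges of span 2: 2.66 = 3/d2 with d2 = 1.128, D4 = 3.267, and D3 = 0. The fitted Johnson parameters are not given here, so the sketch uses the natural-log transform of the delivery data as a stand-in. With real software, substitute the Johnson-transformed values.

```python
import math
import statistics

def imr_limits(y):
    """I-MR control limits for individual values (moving ranges of span 2).

    Returns (lower, center, upper) per chart, using 2.66 = 3 / d2 (d2 = 1.128)
    for the I chart and D3 = 0, D4 = 3.267 for the MR chart.
    """
    moving_ranges = [abs(b - a) for a, b in zip(y, y[1:])]
    mr_bar = statistics.fmean(moving_ranges)
    center = statistics.fmean(y)
    return {
        "I": (center - 2.66 * mr_bar, center, center + 2.66 * mr_bar),
        "MR": (0.0, mr_bar, 3.267 * mr_bar),
    }

delivery_times = [22, 76, 10, 2, 3, 6, 7, 5, 6, 2, 8, 1, 3, 5, 63, 4, 22, 13, 4, 12]
# Stand-in transform: natural log (the article's chart uses Johnson-transformed data).
y = [math.log(x) for x in delivery_times]
limits = imr_limits(y)
lcl, _, ucl = limits["I"]
beyond_limits = [i for i, v in enumerate(y, start=1) if not lcl <= v <= ucl]
print(limits)
print("points beyond I-chart limits:", beyond_limits)
```

Because the log transform is only a stand-in, its verdict need not match the article's Johnson-based chart.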
